Çelebi, Fatma

Profile URL

Name Variants

Celebi, Fatma

Fatma Çelebi

Çelebi, Fatma

Job Title

Research Assistant

Email Address

fatma.celebi@agu.edu.tr

Main Affiliation

02. 04. Computer Engineering

Status

Current Staff

Website

ORCID ID

Scopus Author ID

Turkish CoHE Profile ID

Google Scholar ID

WoS Researcher ID

Sustainable Development Goals

3

GOOD HEALTH AND WELL-BEING

2

Research Products

This researcher does not have a Scopus ID.

This researcher does not have a WoS ID.

Scholarly Output

5

Articles

4

Views / Downloads

350/244

Supervised MSc Theses

0

Supervised PhD Theses

1

WoS Citation Count

15

Scopus Citation Count

19

WoS h-index

2

Scopus h-index

2

Patents

0

Projects

0

WoS Citations per Publication

3.00

Scopus Citations per Publication

3.80

Open Access Source

2

Supervised Theses

1
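
The summary figures above can be cross-checked against the per-item citation counts shown in the search results on this page (WoS: 9 and 6; Scopus: 12 and 7). The following is a minimal Python sketch of that arithmetic, assuming that the three items listed without a citation count have zero citations and that the repository divides total citations by the scholarly output of 5. The function names are illustrative and not part of any repository API.

```python
from typing import List


def h_index(citations: List[int]) -> int:
    """Return the largest h such that h items have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def citations_per_publication(citations: List[int]) -> float:
    """Total citations divided by the number of items."""
    return sum(citations) / len(citations)


# Per-item counts as listed on this page. The three zeros are an ASSUMPTION:
# those items are shown without a citation count.
wos = [9, 6, 0, 0, 0]
scopus = [12, 7, 0, 0, 0]

for name, counts in (("WoS", wos), ("Scopus", scopus)):
    print(
        name,
        "total:", sum(counts),
        "h-index:", h_index(counts),
        "per publication:", f"{citations_per_publication(counts):.2f}",
    )
```

Under these assumptions the sketch reproduces the totals (15 and 19), the h-index of 2 for both sources, and the per-publication values of 3.00 and 3.80 reported above.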

Google Analytics Visitor Traffic

| Journal | Count |
|---|---|
| Biomedical Signal Processing and Control | 1 |
| Concurrency and Computation-Practice & Experience | 1 |
| International Journal of Imaging Systems and Technology | 1 |
| Oral Radiology | 1 |

Scopus Quartile Distribution

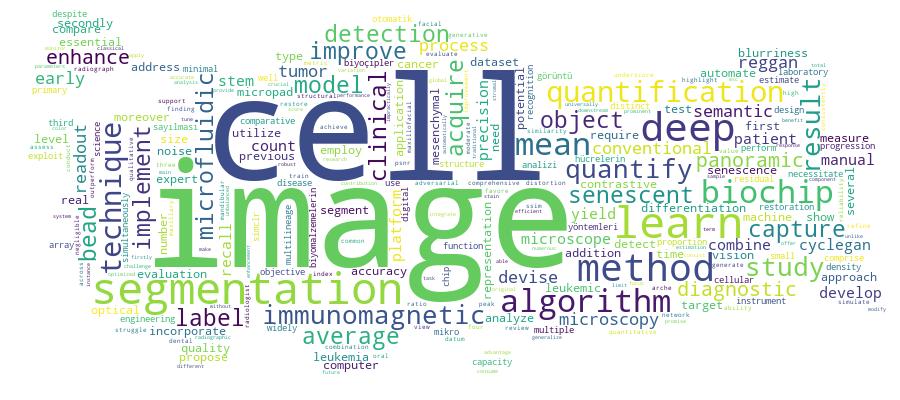

Competency Cloud

Scholarly Output Search Results

Article

Enhancing Diagnostic Quality in Panoramic Radiography: A Comparative Evaluation of GAN Models for Image Restoration (Wiley, 2025)

Kolukisa, Burak; Celebi, Fatma; Ersu, Nihal; Yucel, Kemal Selcuk; Canger, Emin Murat

Panoramic imaging is a widely used technique for capturing a comprehensive view of the maxillary and mandibular dental arches and supporting facial structures. This study evaluates the potential of three Generative Adversarial Network (GAN) models (Pix2Pix, CycleGAN, and RegGAN) to enhance diagnostic quality by correcting combinations of common image distortions. A panoramic radiograph data set was processed to simulate four types of distortion: (i) blurriness, (ii) noise, (iii) combined blurriness and noise, and (iv) anterior-region-specific blurriness. The three GAN models were trained and analyzed using quantitative metrics, namely the peak signal-to-noise ratio (PSNR) and the structural similarity index measure (SSIM). In addition, two oral and maxillofacial radiologists conducted qualitative reviews to assess the diagnostic reliability of the generated images. Pix2Pix consistently outperformed CycleGAN and RegGAN, achieving the highest PSNR and SSIM values across all types of distortion. Expert evaluations also favored Pix2Pix, highlighting its ability to restore image accuracy and enhance clinical utility. CycleGAN showed moderate improvements on noise-affected images but struggled with combined distortions, while RegGAN yielded negligible enhancements. These findings underscore the potential of Pix2Pix for clinical application in refining radiographic imaging. Future research should focus on combining GAN techniques and using larger datasets to develop universally robust image enhancement models.

Article

Deep-Learning Detection of Open-Apex Teeth on Panoramic Radiographs Using YOLO Models (Springer, 2025)

Edik, Merve; Celebi, Fatma; Cukurluoglu, Aykagan

Objectives: The use of deep learning to detect teeth with open apices can prevent the need for additional radiographs for patients. This study aims to detect open-apex teeth using You Only Look Once (YOLO)-based deep learning models and to compare these models.

Methods: A total of 966 panoramic radiographs were included in the study. Open-apex teeth in the panoramic radiographs were labeled and divided into 6 classes: incisors, premolars, and molars in the maxilla and in the mandible. The YOLOv3, YOLOv4, and YOLOv5 models were used. To evaluate the performance of the three detection models on the test dataset, both overall and separately for each class, precision, recall, mean average precision (mAP), and F1 score were calculated.

Results: YOLOv4 achieved the highest overall performance with a mean average precision of 87.84% at an Intersection over Union (IoU) threshold of 0.5 (mAP@0.5), followed by YOLOv5 with 85.6% and YOLOv3 with 84.46%. YOLOv4 also led in recall with 90%, while both YOLOv3 and YOLOv5 reached 89%. The F1 score was highest for YOLOv4 (0.87), followed by YOLOv3 (0.86) and YOLOv5 (0.85).

Conclusions: In this study, YOLOv3, YOLOv4, and YOLOv5 were evaluated for the detection of open-apex teeth, and their mAP, recall, and F1 scores exceeded 84%. Deep learning-based systems can provide faster and more accurate results in the detection of open-apex teeth. This may help reduce the need for additional radiographs from patients and save dentists time.

Doctoral Thesis

Biyoçipler için Mikro Biyomalzemelerin ve Hücrelerin Görüntü İşleme Yöntemleri ile Otomatik Olarak Sayılması ve Analizi [Automatic Counting and Analysis of Micro Biomaterials and Cells for Biochips Using Image Processing Methods] (Abdullah Gül Üniversitesi, Fen Bilimleri Enstitüsü, 2023)

Çelebi, Fatma; İçöz, Kutay

Quantification of tumor cells is essential for early cancer detection and for tracking disease progression. In addition to conventional laboratory instruments, several biochip-based techniques have been devised to detect tumor cells. Our biochip design incorporates micron-sized immunomagnetic beads and micropad arrays, so it requires automated detection and quantification not only of cells but also of the micropads and immunomagnetic beads. The primary function of the biochip is to simultaneously capture target cells with distinct antigens. As a readout technique for the biochip, this study devised a digital image processing-based method for quantifying leukemia cells, immunomagnetic beads, and micropads. Images were acquired on the chip using bright-field microscopy with 20X and 40X objectives. Conventional image processing, machine learning, and deep learning methods were used to analyze the images. First, color- and size-based object recognition and machine learning-based methods were implemented to quantify targets in the images captured by the bright-field microscope. Second, color- and size-based object detection and object segmentation methods were implemented to detect structures in bright-field optical microscope images acquired from the biochip. Third, deep learning-based segmentation was applied to minimal residual disease (MRD) biochip images comprising leukemic cells, immunomagnetic beads, and micropads. In addition, mesenchymal stem cells (MSCs) are stem cells with the capacity for multilineage differentiation and self-renewal, and estimating the proportion of senescent cells is essential for their clinical applications. In this study, a self-supervised learning (SSL)-based method for segmenting and quantifying the density of cellular senescence was implemented, which performs well despite the small size of the labeled dataset.

Article

Citation - WoS: 9

Citation - Scopus: 12

Deep Learning Based Semantic Segmentation and Quantification for MRD Biochip Images (Elsevier Sci Ltd, 2022)

Celebi, Fatma; Tasdemir, Kasim; Icoz, Kutay

Microfluidic platforms offer prominent advantages for the early detection of cancer and for monitoring patient response to therapy. Numerous microfluidic platforms that integrate various readout methods have been developed for capturing and quantifying tumor cells. Earlier, we developed a microfluidic platform (MRD Biochip) to capture and quantify leukemia cells. This is the first study to apply deep learning-based segmentation to MRD Biochip images consisting of leukemic cells, immunomagnetic beads, and micropads. Implementing deep learning algorithms makes two main contributions. First, the quantification performance of the readout method is improved for the unbalanced dataset. Second, unlike the previous classical computer vision-based method, it does not require any manual tuning of parameters, which results in a model that generalizes better across variations in object size, color, and noise. As a result of these benefits, the proposed system is promising for providing real-time analysis for microfluidic systems. Moreover, we compare different deep learning-based semantic segmentation algorithms on an image dataset acquired from real patient samples using bright-field microscopy. Without cell staining, a hyperparameter-optimized, modified U-Net semantic segmentation algorithm yields 98.7% global accuracy, 86.1% mean IoU, 92.2% mean precision, 92.2% mean recall, and 92.2% mean F1 score on the patient dataset. After segmentation, quantification yields 89% average precision and 97% average recall on test images. By applying deep learning algorithms, we improve on our previous results, which were obtained with conventional computer vision methods.

Article

Citation - WoS: 6

Citation - Scopus: 7

Improved Senescent Cell Segmentation on Bright-Field Microscopy Images Exploiting Representation Level Contrastive Learning (Wiley, 2024)

Celebi, Fatma; Boyvat, Dudu; Ayaz-Guner, Serife; Tasdemir, Kasim; Icoz, Kutay

Mesenchymal stem cells (MSCs) are stromal cells that have multi-lineage differentiation and self-renewal potential. Accurate estimation of the total number of senescent cells in MSCs is crucial for clinical applications. Traditional manual cell counting using an optical bright-field microscope is time-consuming and requires an expert operator. In this study, senescent cells were segmented and counted automatically by deep learning algorithms. However, well-performing deep learning algorithms require large labeled datasets, and manual labeling is time-consuming and requires an expert. This makes a deep learning-based automated counting process impractically expensive. To address this challenge, a self-supervised learning-based approach was implemented. The approach incorporates a representation-level contrastive learning component into the instance segmentation algorithm for efficient senescent cell segmentation with limited labeled data. Test results showed that the proposed model improves the mean average precision and mean average recall of the downstream segmentation task by 8.3% and 3.4%, respectively, compared to the original segmentation model.
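
Several of the abstracts above report overlap-based metrics such as IoU, precision, recall, and F1 score. As a rough illustration of how these quantities relate for a single class, the following Python sketch computes them from a pair of toy binary masks. It is not the authors' evaluation code: the function name and the masks are hypothetical, and the "mean" values reported in the abstracts would additionally average such per-class figures over all classes.

```python
from typing import Dict, List

Mask = List[List[int]]  # 1 = pixel belongs to the class, 0 = background


def binary_segmentation_metrics(pred: Mask, truth: Mask) -> Dict[str, float]:
    """Compute IoU, precision, recall, and F1 for one class from two masks."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # predicted and actually present
            elif p and not t:
                fp += 1  # predicted but absent in the ground truth
            elif t and not p:
                fn += 1  # present in the ground truth but missed

    union = tp + fp + fn
    iou = tp / union if union else 1.0  # two empty masks agree perfectly
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"iou": iou, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical 2x3 masks, used only to exercise the function.
predicted = [[1, 1, 0],
             [0, 1, 0]]
ground_truth = [[1, 0, 0],
                [0, 1, 1]]

metrics = binary_segmentation_metrics(predicted, ground_truth)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these toy masks there are two true positives, one false positive, and one false negative, so IoU is 0.5 while precision, recall, and F1 are each 2/3. The example also shows that IoU is never higher than F1 for the same prediction, which is consistent with the mean IoU (86.1%) sitting below the mean F1 score (92.2%) in the MRD Biochip abstract.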